Data quality ETL process

Goal

Implement a data warehouse ETL loading process which will perform data quality and consistency tests and generate reports with records that do not pass the validation rules. This topic describes how to perform basic data cleansing tasks using any ETL tool.

Data quality

The data quality process includes such terms as data cleansing, data validation, data manipulation, data quality tests, data refining, data filtering and tuning. It is a crucial area to maintain in order to keep the data warehouse trustworthy for the business users.

An appropriate definition of the data quality criteria will ensure that the quality of the data is measured, analyzed and subsequently improved. The data quality management in an organization requires great involvement from the business side and very often a lot of manual interventions.

ETL plays a major role in data cleansing and data quality process as it helps automate most of the tasks outlined above.

Scenario details

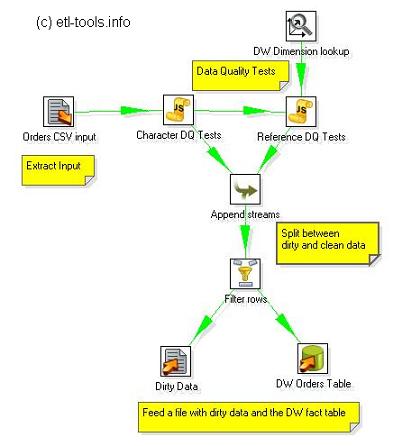

The data warehouse is fed daily with an orders extract which comes from a source OLTP system. Unfortunately, the data quality in that extract is poor as the source system does not perform much consistency checks and there are no data dictionaries.

The data quality problems that need to be addressed are identified using two types of Data Quality Tests: syntax and reference tests.

The syntax tests will report dirty data based on character patterns, invalid characters, incorrect lower or upper case order, etc.

The reference tests will check the integrity of the data according to the data model. So, for example a customer ID which does not exist in a data warehouse customers dictionary table will be reported.

Also, both types of tests report using two severity levels: errors and warnings.

When an error is encountered, the record is logged and not passed through to the output.

Warnings are logged, however still loaded into the data warehouse.

The data quality problems in this example are as follows:

Solution

The idea is to capture records containing invalid data in a rejects file for further data quality inspection and analysis.

In practice, the ETL process design in any of the comercial ETL tools will be pretty straightforward and will include an input, a validation step and two outputs: validated records and dirty data.

The two validation transformations (Character and reference DQ Tests) depicted below will do the following:

The following variations will be reported as warnings: (0034) 112223333, 11 2223333, +34 11-22-3333

Data quality web links

- Data quality - shows the major challenges addressed by Data Quality tools in a data warehousing environment. It descibes such topics as data correctness, consistency, completeness and validity.

- Data cleansing - the article provides definitions, loading techniques and source data selection considerations for the typical data cleansing processes.

Back to the Data Warehousing tutorial home